The Panda Challenge

How organizing a competition and involving the public can improve the development of AI algorithms.

Even though the name of this paper suggests pandas were involved, it was actually a histopathology competition to develop the best possible AI algorithm for detecting and grading prostate cancer. PANDA, with a bit of ingenuity, stands for "Prostate cANcer graDe Assessment". The research team from Radboud UMC and Karolinska Institutet identified a few interesting problems they wanted to solve.

The problems

Pathologists grade prostate biopsies according to the Gleason scale. Because the grading is done by different pathologists, the diagnoses vary from one observer to the next, just as in other medical specialities.

A possible solution is implementing AI algorithms to improve the accuracy and speed of grading. However, such algorithms are often developed and validated by the same researchers, which holds them back from being implemented in clinical practice. See the problem here?

One way to develop them is through competitions, which markedly increase the speed of development, but the resulting algorithms are frequently not independently validated or reproduced.

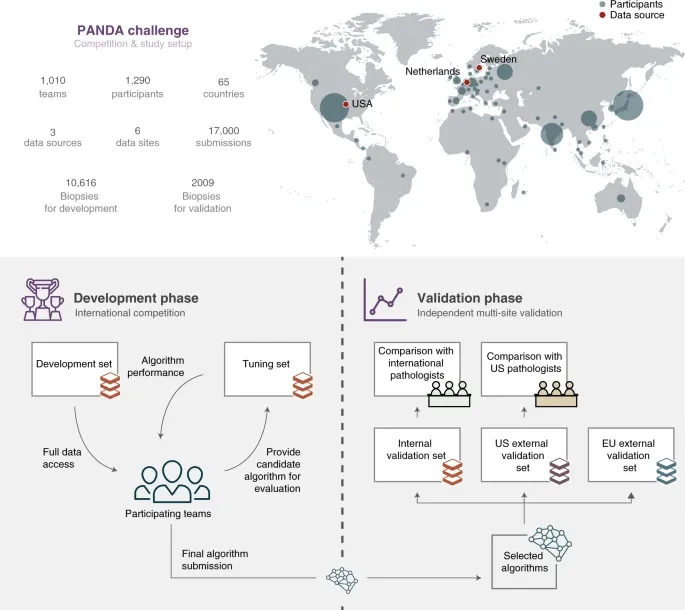

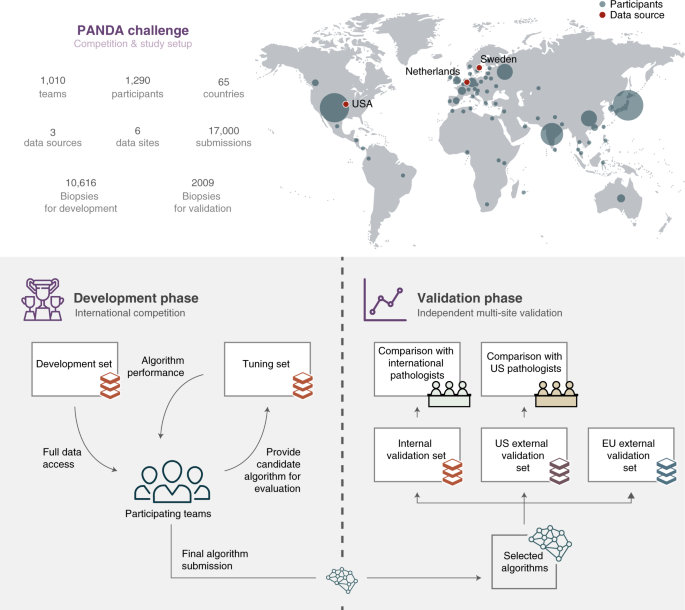

The competition

For those reasons, the research team released almost 11,000 images of prostate biopsies to the public and recruited more than 1,000 teams from around the world. It was one of the largest such competitions to date. In total, the algorithms made more than 32 million predictions, which showcases how useful it is to involve the community. Pathologist-level diagnoses were achieved in just 10 days.

Algorithm development and validation

During the development phase, the teams trained the algorithms using a tuning set of images provided by the researchers. Afterward, the research team chose the best performing ones to enter the reproduction and validation phases.

In essence, they used three sets of images with reference standards prepared by experienced (uro)pathologists. In the case of the US validation set, they even used immunohistochemistry to confirm the diagnosis. They also asked a group of international and US pathologists to diagnose the same images as the AI and compared the diagnostic accuracy of the two.
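To make the comparison against a reference standard more concrete, here is a minimal sketch of one way to score agreement between two sets of ordinal grades. This summary does not name the metric the researchers used, so the choice of quadratically weighted Cohen's kappa is an assumption, as are the function name, the six grade levels (0 to 5) and the example grades, which are invented purely for illustration.

```python
def quadratic_weighted_kappa(rater_a, rater_b, num_grades):
    """Agreement between two lists of integer grades in range(num_grades).

    Returns 1.0 for perfect agreement and about 0.0 for chance-level
    agreement. Disagreements are penalised by the squared grade distance.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters need the same non-zero number of grades")
    n = len(rater_a)

    # observed[i][j] counts cases graded i by rater A and j by rater B
    observed = [[0] * num_grades for _ in range(num_grades)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1

    # Marginal totals: how often each rater assigned each grade
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(num_grades))
              for j in range(num_grades)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_grades):
        for j in range(num_grades):
            weight = (i - j) ** 2 / (num_grades - 1) ** 2
            expected = hist_a[i] * hist_b[j] / n  # count expected by chance
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0.0:
        # Both raters gave every case the same single grade
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Invented example: six biopsies, one pair of adjacent grades swapped
reference_grades = [0, 1, 2, 3, 4, 5]
algorithm_grades = [0, 1, 2, 3, 5, 4]
kappa = quadratic_weighted_kappa(reference_grades, algorithm_grades, 6)
print(round(kappa, 3))
```

Because the penalty grows with the square of the grade distance, confusing two neighbouring grades costs far less than confusing a benign biopsy with a high-grade one, which is why a weighted score of this kind suits ordinal grading better than plain accuracy.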

Findings

The findings were astonishing. These community-developed AI algorithms could identify and classify tumours as well as pathologists. In some cases, their accuracy even exceeded that of the pathologists. Compared with algorithms from previous similar papers, they performed even better, and they were just as good on unseen data (the external validation sets).

Limitations

On the other hand, this paper showcases that it's not all about the algorithms. In clinical practice, doctors examine multiple biopsies from the same patient and also take the clinical picture into account, neither of which was included in the paper.

Also, the training was limited to adenocarcinoma, which means we cannot assess the performance of the algorithms on other types of cancer. Furthermore, the reference standards were set by a single pathologist, and the evaluation was based on data from countries with predominantly white populations.

This summary is based on research from the article Artificial intelligence for diagnosis and Gleason grading of prostate cancer: the PANDA challenge by Bulten, W., Kartasalo, K., Chen, PH.C. et al. in Nature Medicine. The original work is freely available and licensed under the Creative Commons Attribution 4.0 International License. Adaptations and summaries are provided by Medical Notes and are not endorsed by the original authors or publisher.